Suppose you are a C++ programmer on a project and you have the best intentions to write really good code. The one fellow you had better make friends with is your compiler. He will support you in your efforts whenever he can. Unless you don’t let him. One sure way to reject his help is to switch off all compiler warnings. I know it should be well known by now that you should always compile at high warning levels, but it seems that many people just don’t do it.

Taking g++ as an example, high warning levels do not mean just having “-Wall” switched on. Even if its name suggests otherwise, “-Wall” is just the minimum there. If you spend just five minutes or so looking at the man page of g++, you will find many more helpful and reasonable -W… switches. For example (g++-4.3.2):

-Wctor-dtor-privacy: Warn when a class seems unusable because all the constructors or destructors in that class are private, and it has neither friends nor public static member functions.
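To make that a bit more concrete, here is a minimal sketch of the kind of class this switch is about, next to a variant that stays usable. The class names Locked and Unlocked are made up for illustration, and the exact wording of the warning depends on your g++ version.

```cpp
// Expected to draw the -Wctor-dtor-privacy warning: the only constructor is
// private, and there are neither friends nor public static member functions,
// so nobody can ever create a Locked object.
class Locked
{
    Locked() {}
};

// Not expected to draw the warning: the constructor is still private, but a
// public static member function gives clients a way to create objects.
class Unlocked
{
public:
    static Unlocked create(int value) { return Unlocked(value); }
    int value() const { return value_; }

private:
    explicit Unlocked(int value) : value_(value) {}
    int value_;
};
```

Client code would then write something like Unlocked::create(42), while any attempt to create a Locked object fails to compile.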

Cool stuff! Let’s see what else is there:

-Woverloaded-virtual: Warn when a function declaration hides virtual functions from a base class. Example:

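class Base
{
public:
    virtual void myFunction();
};

class Subclass : public Base
{
public:
    // The added const changes the signature, so this hides
    // Base::myFunction() instead of overriding it.
    void myFunction() const;
};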

I would certainly like to be warned about that, but maybe that’s just me.
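Why is this worth a warning? Here is a small sketch of what happens at runtime. I changed the return type from void to std::string so the effect becomes visible, and callThroughBase is a helper name I made up for this illustration.

```cpp
#include <string>

class Base
{
public:
    virtual ~Base() {}
    virtual std::string myFunction() { return "Base"; }
};

class Subclass : public Base
{
public:
    // Different signature because of const: hides, does not override.
    std::string myFunction() const { return "Subclass"; }
};

// Calls myFunction() through a reference to the base class, the way
// polymorphic code usually does.
std::string callThroughBase(Base& object)
{
    return object.myFunction();
}
```

Passing a Subclass object to callThroughBase yields "Base", because the const version never became an override. Calling myFunction() directly on a Subclass object yields "Subclass". If your compiler supports C++11, marking the function in Subclass with override turns this mistake into a compile error.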

-Weffc++: Warn about violations of style guidelines from Scott Meyers’ Effective C++ book.

This is certainly one of the most “effective” weapons in your fight for bug-free software. It causes the compiler to spit out warnings about code issues that can lead to subtle and hard-to-find bugs but also about things that are considered good programming practice.
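One guideline this switch checks, as far as I know, is that members should be initialized in the member initialization list rather than assigned in the constructor body. A minimal sketch, with the class names Sloppy and Tidy made up for illustration:

```cpp
#include <string>

// Expected to draw a -Weffc++ warning: name_ is default-constructed first
// and then assigned in the constructor body.
class Sloppy
{
public:
    explicit Sloppy(const std::string& name) { name_ = name; }
    const std::string& name() const { return name_; }

private:
    std::string name_;
};

// Same behavior, but name_ is initialized in the member initialization list.
class Tidy
{
public:
    explicit Tidy(const std::string& name) : name_(name) {}
    const std::string& name() const { return name_; }

private:
    std::string name_;
};
```

Both classes behave the same here, which is exactly the point: the warning is about practice, not about an outright bug.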

So suppose you read the g++ man page, you enabled all warning switches in addition to “-Wall” that seem reasonable to you, and you plan to compile your project cleanly without warnings. Unfortunately, chances are quite high that your effort will be instantly thwarted by third-party libraries that your code includes. Because even if your code is clean and shiny, the header files of a third-party library may not be. Especially with “-Weffc++” this can result in a massive amount of warning messages in code that you have no control over. Even with the otherwise powerful, easy-to-use and supposedly mature Qt library you run into that problem. Compiling code that includes Qt headers like <QComboBox> with “-Weffc++” switched on is just unbearable.

Leaving aside the fact that my confidence in Qt has declined considerably since I noticed this, the question remains how to ignore the shortcomings of other people’s code. With GCC you can, for example, add pragmas around offending includes as described here. Or you can create wrapper headers for third-party includes that contain

#pragma GCC system_header
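The first option, pragmas around the offending include, could look roughly like the sketch below. Two caveats: the push/pop form needs a newer GCC (4.6 or later, as far as I know) than the 4.3.2 mentioned above, and I use <string> as a stand-in for a noisy third-party header so that the sketch compiles without Qt. labelLength is just a made-up function to show that your own code follows afterwards.

```cpp
#include <cstddef>

// Remember the current warning settings, then silence -Weffc++ for the
// third-party include only.
#pragma GCC diagnostic push
#pragma GCC diagnostic ignored "-Weffc++"
#include <string> // stand-in for a noisy header such as <QComboBox>
#pragma GCC diagnostic pop

// From here on the previous warning settings apply again to our own code.
inline std::size_t labelLength(const std::string& label)
{
    return label.size();
}
```

Another route that is often used is to pass the library’s include directory with -isystem instead of -I, so that GCC treats everything in it as system headers.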

AFAIK Microsoft’s compilers have similar pragmas:

#pragma warning(push, 1)
#include
#include
#pragma warning(pop)

Warning switches are a powerful tool to increase your code quality. You just have to use them!

In my rare spare time, I hold lectures on software engineering at the


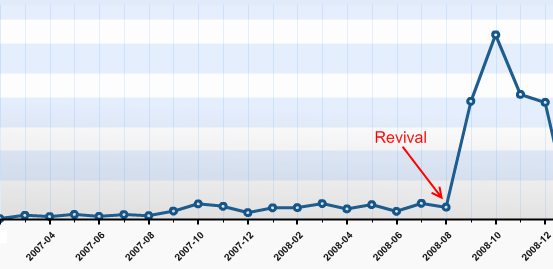

