A good book about leadership mentality is “Leaders Eat Last” by Simon Sinek. The book is not about your diet, but about your approach towards your subordinates and your peer group.

I don’t want to recapitulate the content of the book – it is worth the read, or at least worth watching a presentation about it. I want to talk about one specific implementation of the principle in my company that I practiced even before reading the book, but could only name and highlight after Simon Sinek lent me his analogy.

I’m a software developer and founded a software development company. I hired other software developers and they develop software with me. I might be the founder, owner and director of the company (so, in short, the “leader”), but I’m still a fellow developer and understand the developer’s mindset. So I know what a developer wants, because I want it, too.

Except, I make sure that I’m the last one in the company to get it.

Two examples:

We bought our second round of office desks in 2010, when we moved into a new office. They were still traditional desks that could only be height-adjusted with tremendous effort. We only did it once and settled for “good enough”. Our first electrically height-adjustable desk was bought in 2013 because of a specific medical requirement. But it opened the door to the possibility of having the desk at any height throughout the day. You might even work standing up.

We slowly accumulated more electrically height-adjustable desks until we had 50 percent classic and 50 percent electric desks. At that point, I replaced the remaining classic desks all at once (and the new ones are fancy “gamer nerd” desks, because why not?). The last classic desk in the company was my own. I replaced it with the oldest electric desk in the portfolio. Now I can work while standing up, too.

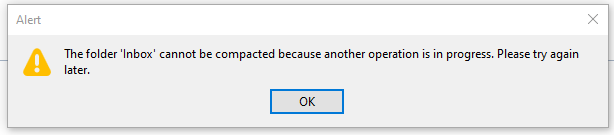

When the Corona pandemic hit in 2020, we moved to home offices all of a sudden. I wrote about this change several times on this blog. This physical separation led to an increased demand for video calls. I made sure everyone was covered with the basic equipment (webcam, headphones, etc.), including me. But I also experimented with the concept of a “virtual office”. It consisted of a video meeting room that I hung out in for the whole workday. I turned the camera and microphone off, but was instantly present if somebody wanted to talk to me – just like in the real office. For this use case, I added another monitor to my setup, the fourth one, called the “pandemic display” in a blog post about it. Because I didn’t know if the experiment would work, I bought the smallest and cheapest display available to me.

The experiment went fine and I decided to equip everyone with an additional “videoconference display”. The new models were bigger and better. If an employee didn’t see the benefit of it, I didn’t force them to install one in their home office, but every workplace in the office has at least four monitors. Guess where the original one is still installed? I made sure everybody had a better monitor than me.

With this process, I can guarantee that my employees have work equipment that is good enough for their boss. Because I have it too – or something inferior. If I feel the need to upgrade my gear, I upgrade everybody else and then lift my things to their level. If I feel comfortable with my gear, so does everybody else (except for individual demands, and we have a process in place for those, too).

I love self-regulating systems and this is one: The whole company is equipped in a manner that is sufficient or even better for me to do the work. If I want more or better things, everybody gets the upgrade before me because only then do I allow myself to have the same luxury. No “upward” exception for the boss, and only temporarily “downwards”. My wants and needs define the lower limit of equipment quality for all of us. If I can’t buy it for everyone, I don’t buy it.

That is the whole trick: Equip yourself last or lowest. You can be sure everybody is well-equipped that way. Thanks, Simon!