The other day I was browsing through the C++ API code of a third-party library. I was not much surprised to see stuff like

#define MAX(a, b) ( (a) >= (b) ? (a) : (b))
#define MIN(a, b) ( (a) <= (b) ? (a) : (b))

because even though std::min and std::max, together with the rest of the C++ standard library, have been around for quite a while now, you still frequently come across old-fashioned code like the above. But things got worse:

#define FALSE 0
#define TRUE 1

and later:

...
bool someVariable = TRUE;

As if they had learned only half the story about the bool type in C++. But there was more to come:

class ListItem
{
    ListItem* next;
    ListItem* previous;
    ...
};

class List : private ListItem
{
    ...
};

Yes, that’s right, the API guys created their own linked-list implementation. And a pretty weird one, too, mixing templates with void* pointers to hold the contents. Now, why on earth would you do that when you could just use std::list or std::vector? It makes you wonder about the quality of the rest of the code, especially in C++, where there are so many little pitfalls and details that can burn you. Hey, if you have no clue about the very basics of a language, leave it alone!
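To make the complaints above a bit more concrete, here is a minimal sketch of what can go wrong with the macros and what the standard library offers instead. The MAX and TRUE macros are copied from the snippets above; every function name (max_with_macro, max_with_std, describe, join_names, check_examples) is made up for illustration and is not part of the library in question.

```cpp
#include <algorithm>
#include <cassert>
#include <list>
#include <string>
#include <utility>

// Macros as they appear in the library header.
#define MAX(a, b) ( (a) >= (b) ? (a) : (b))
#define TRUE 1

// Hypothetical helper: returns {result, final value of i} when the macro is used.
inline std::pair<int, int> max_with_macro() {
    int i = 5;
    int j = 3;
    // Expands to ((i++) >= (j) ? (i++) : (j)), so i++ is evaluated twice.
    int result = MAX(i++, j);
    return std::make_pair(result, i);
}

// Hypothetical helper: the same call through std::max evaluates i++ once.
inline std::pair<int, int> max_with_std() {
    int i = 5;
    int j = 3;
    int result = std::max(i++, j);
    return std::make_pair(result, i);
}

// Hypothetical overload pair: TRUE is the int literal 1, not a bool,
// so it selects the int overload.
inline std::string describe(bool) { return "bool"; }
inline std::string describe(int) { return "int"; }

// A typed std::list instead of a hand-rolled list holding void* contents.
inline std::string join_names() {
    std::list<std::string> names;
    names.push_back("beta");
    names.push_back("gamma");
    names.push_front("alpha");
    std::string out;
    for (std::list<std::string>::const_iterator it = names.begin();
         it != names.end(); ++it) {
        if (it != names.begin()) out += ", ";
        out += *it;
    }
    return out;
}

// Small self-check of the behaviour described in the comments.
inline void check_examples() {
    assert(max_with_macro() == std::make_pair(6, 7));
    assert(max_with_std() == std::make_pair(5, 6));
    assert(describe(TRUE) == "int");
    assert(describe(true) == "bool");
    assert(join_names() == "alpha, beta, gamma");
}
```

The macro version silently increments i twice and returns the incremented value, while std::max behaves like any other function call. The list part needs no casts because the element type is part of the container's type.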

Unfortunately, the above example is not exceptional in industry software. It seems that the C++ world these days is actually split in two. In one world, people like Andrei Alexandrescu write great books about Modern C++ Design, Scott Meyers gives talks about Effective C++, and the Boost guys introduce the next library using even more creative operator overloading than in the Spirit library (which is pretty cool stuff, btw).

In the other world, which you could easily call industry reality, people barely know the STL, don’t use templates at all, or fall for misleading and dangerous C++ features like the throw() clause in method signatures. Or they ban certain C++ features because they are supposedly not easy to understand for the new guy on the project, or are less readable in general. Take for example the Google C++ Style Guide. They don’t even allow exceptions, or the use of std::auto_ptr. Their take on the Boost library is that “some of the libraries encourage … an excessively “functional” style of programming”. What exactly is bad about a piece of functional programming used as the right tool in the right place? And what communicates ownership issues better than, e.g., returning a heap-allocated object using a std::auto_ptr?
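The ownership argument can be sketched in a few lines. One caveat: std::auto_ptr was deprecated in C++11 and removed in C++17, so the sketch below uses std::unique_ptr as a stand-in to keep it compilable with current compilers. The point about the return type stays the same. Report, create_report and check_ownership_transfer are hypothetical names used only for illustration.

```cpp
#include <cassert>
#include <memory>
#include <string>
#include <utility>

// Hypothetical type that is created on the heap.
struct Report {
    std::string title;
    explicit Report(const std::string& t) : title(t) {}
};

// The return type alone tells the caller: you own this object now,
// and it is destroyed when the smart pointer goes out of scope.
inline std::unique_ptr<Report> create_report(const std::string& title) {
    return std::unique_ptr<Report>(new Report(title));
}

// Small self-check: ownership moves explicitly from one pointer to the other.
inline void check_ownership_transfer() {
    std::unique_ptr<Report> first = create_report("Q3");
    std::unique_ptr<Report> second = std::move(first);
    assert(!first);                            // first gave up ownership
    assert(second && second->title == "Q3");   // second holds the object
}
```

Compare that to a function returning a raw Report*, where only the documentation (if any) says who has to call delete.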

The no-exceptions rule is also only partly understandable. Sure enough, exceptions increase code complexity in C++ more than in other languages (read Items 18 and 19 of Herb Sutter’s Exceptional C++ as an eye-opener, or look here). But IMHO their advantages still outweigh their downsides.

With the upcoming C++0x standard, my guess is that the situation will not get any better, to put it mildly. Most likely, things like type inference with the new auto keyword will sell big because they save typing effort. Same thing with the long overdue feature of constructor delegation. But why would people who find functional programming less readable start to use lambda functions? As little known as the explicit keyword is now, how many people will know about or actually use the new “= delete” syntax, let alone “= default”? Maybe I’m a little too pessimistic here, but I will certainly put a mark in my calendar on the day I encounter the first concept definition in some piece of industry C++ software.

Update: Concepts have been removed from C++0x, so that mark in my calendar will not come any time soon…
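For reference, here is a small sketch of the C++0x features mentioned above (auto, constructor delegation, lambdas, “= delete” and “= default”) as they ended up in C++11. The Connection class, the default port of 80 and the count_even function are invented for this example.

```cpp
#include <algorithm>
#include <cassert>
#include <type_traits>
#include <vector>

// Hypothetical class showing constructor delegation, = delete and = default.
class Connection {
public:
    Connection() : Connection(80) {}                  // delegates to the int constructor
    explicit Connection(int port) : port_(port) {}    // explicit: no silent int conversion
    Connection(const Connection&) = delete;           // copying is forbidden ...
    Connection& operator=(const Connection&) = delete;
    ~Connection() = default;                          // ... the destructor stays compiler-generated
    int port() const { return port_; }
private:
    int port_;
};

static_assert(!std::is_copy_constructible<Connection>::value,
              "Connection must not be copyable");

// Hypothetical function showing auto and a lambda passed to a standard algorithm.
inline int count_even(const std::vector<int>& values) {
    auto is_even = [](int v) { return v % 2 == 0; };
    return static_cast<int>(std::count_if(values.begin(), values.end(), is_even));
}

// Small self-check of the behaviour described in the comments.
inline void check_cpp0x_features() {
    Connection c;
    assert(c.port() == 80);
    assert(count_even(std::vector<int>{1, 2, 3, 4}) == 2);
}
```

Before “= delete”, the usual trick was to declare the copy constructor private and leave it undefined, which states the intent far less clearly.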

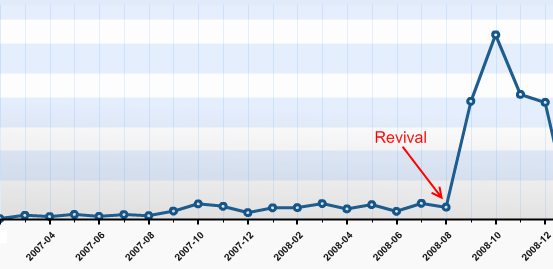

We started this blog in February 2007. Soon afterwards, it was nearly dead, as no new articles were written. Why? We would have answered that we were “under pressure”, had “more relevant things to do” or simply had “no idea about what to write”. The truth is that we didn’t regard this blog as being important to us. We didn’t allocate any resources to it, be it time, topics or attention. Seeming unimportant is a sure cause of death for any business resource in any mindfully managed company.
