A code design pattern I’ve used a lot in recent times is “optional-based polymorphism”: a common type delegates to a more specific type that might not be available. It can be seen as an implementation of the FCoI principle (Favour Composition over Inheritance).

Let’s look at an example: An application has several different engines that move stuff around. Some engines are based on limit switches. They move until they are stopped by a physical switch. The application can make these engines move from one predefined position to the next, but not anywhere in between. Another type of engine is based on a relative position. You give the engine the new target position and it positions itself there, without any limit switches or predefined positions.

Traditional approach

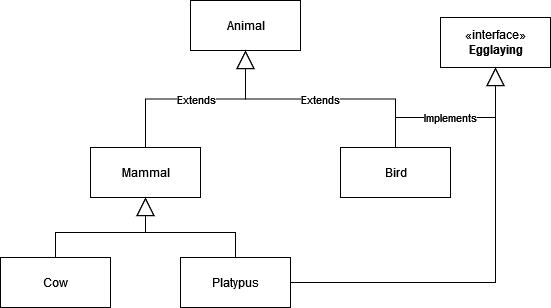

A typical implementation using inheritance would be a common supertype “Engine” that provides the functionality both engine types exhibit. From there, we would define two subtypes that extend the functionality in their desired way. One subtype would be the “LimitSwitchEngine”, the other one the “PositionableEngine”.

Our client code that wants to use a particular engine has two possibilities: It only requires the common functionality of an engine and can work with the supertype. Or it needs to perform a downcast after checking the actual type of the engine.
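To make the downcast variant concrete, here is a minimal sketch of the inheritance-based design. Only the type names, isMoving() and emergencyStop() come from this article; moveToNextSwitch(), moveTo(), startMoving() and the DowncastDemo class are assumptions made up for illustration.

```java
// Common supertype with the functionality both engine types share.
abstract class Engine {
    private boolean moving;

    boolean isMoving() { return moving; }

    void emergencyStop() { moving = false; }

    // Hypothetical helper for the subtypes.
    protected void startMoving() { moving = true; }
}

class LimitSwitchEngine extends Engine {
    // Hypothetical: move until the next physical switch stops the engine.
    void moveToNextSwitch() { startMoving(); }
}

class PositionableEngine extends Engine {
    private double target;

    // Hypothetical: move to an arbitrary target position.
    void moveTo(double position) {
        target = position;
        startMoving();
    }

    double target() { return target; }
}

class DowncastDemo {
    // The client has to check the runtime type and cast before it can
    // use the positioning functionality.
    static boolean tryMoveTo(Engine engine, double position) {
        if (engine instanceof PositionableEngine) {
            PositionableEngine positionable = (PositionableEngine) engine;
            positionable.moveTo(position);
            return true;
        }
        return false;
    }

    public static void main(String[] args) {
        System.out.println(tryMoveTo(new PositionableEngine(), 42.0)); // true
        System.out.println(tryMoveTo(new LimitSwitchEngine(), 42.0));  // false
    }
}
```

Nothing in the Engine type tells the client which subtypes are worth checking for. That knowledge has to come from somewhere else.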

Cast methods

The optional-based polymorphism guides the client code towards the specific subtype by providing all possibilities in the common interface:

```java
import java.util.Optional;

public interface Engine {

    /* Common functionality */
    boolean isMoving();

    void emergencyStop();

    /* Optional-based polymorphism */
    Optional<LimitSwitchEngine> boundToLimitSwitches();

    Optional<PositionableEngine> freelyPositionable();
}
```

The client code uses the Engine interface only as a stepping stone to the specific engine that is required for its use case. If the engine object cannot provide that functionality, you’ll get an empty Optional. Otherwise, you retrieve a reference to the specific type and work with it.
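A minimal sketch of how this could look from both sides, assuming a hypothetical ServoEngine implementation and hypothetical methods moveToNextSwitch() and moveTo() on the specific types. None of these names come from a real codebase; only the Engine interface is taken from the snippet above.

```java
import java.util.Optional;

interface LimitSwitchEngine {
    void moveToNextSwitch(); // hypothetical method
}

interface PositionableEngine {
    void moveTo(double position); // hypothetical method
}

interface Engine {
    boolean isMoving();
    void emergencyStop();
    Optional<LimitSwitchEngine> boundToLimitSwitches();
    Optional<PositionableEngine> freelyPositionable();
}

// Hypothetical engine that can be positioned freely but has no limit switches.
class ServoEngine implements Engine, PositionableEngine {
    private boolean moving;
    private double target;

    @Override public boolean isMoving() { return moving; }

    @Override public void emergencyStop() { moving = false; }

    @Override public Optional<LimitSwitchEngine> boundToLimitSwitches() {
        return Optional.empty(); // this engine cannot offer that functionality
    }

    @Override public Optional<PositionableEngine> freelyPositionable() {
        return Optional.of(this); // here the same object plays both roles
    }

    @Override public void moveTo(double position) {
        target = position;
        moving = true;
    }

    double target() { return target; }
}

class OptionalDemo {
    // No instanceof, no cast: the client asks the engine for the specific type.
    static boolean tryMoveTo(Engine engine, double position) {
        Optional<PositionableEngine> positionable = engine.freelyPositionable();
        positionable.ifPresent(p -> p.moveTo(position));
        return positionable.isPresent();
    }

    public static void main(String[] args) {
        System.out.println(tryMoveTo(new ServoEngine(), 10.0)); // true
    }
}
```

The empty Optional takes the place of the failed type check, so the "not available" case is visible in the method signature instead of hidden in an if statement.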

Disadvantages

One disadvantage of this approach is the fact that the supertype is aware of and even dependent on the different subtypes. You limit the scope of your type hierarchy to the types offered in the “entrance interface”. You can still use the traditional downcast way as described above for all other types, but that separates them into “featured” and “non-featured” subtypes. So this approach violates the Open/Closed principle: the entrance interface cannot be extended with a new engine type without being modified.

Another disadvantage is that your typical navigation in the IDE doesn’t work as well anymore. If you want to know about all the different types of engines in the system, you can’t just look at the type hierarchy of the Engine type anymore. This is because of the first advantage this pattern brings:

Advantages

Not only does this style get rid of the downcast, it also frees your type system up in two different dimensions: The LimitSwitchEngine and PositionableEngine don’t need to be subtypes of Engine. They can be totally independent types with no real connection to the Engine. And they can be different instances. Of course, there is no need to use any of these freedoms. You can still inherit PositionableEngine from Engine and implement both types in the same object. But it isn’t mandatory anymore.
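Both freedoms can be shown in one small sketch. Here, PositionableEngine and LimitSwitchEngine are standalone classes that do not extend or implement Engine, and a hypothetical GantryEngine hands out a separate delegate object. GantryEngine, moveTo(), moveToNextSwitch() and target() are assumptions for illustration only.

```java
import java.util.Optional;

// Independent type: no inheritance relation to Engine at all.
final class PositionableEngine {
    private double target;

    void moveTo(double position) { target = position; } // hypothetical method

    double target() { return target; }
}

// Also independent; unused by GantryEngine, but required by the interface.
final class LimitSwitchEngine {
    void moveToNextSwitch() { } // hypothetical method
}

interface Engine {
    boolean isMoving();
    void emergencyStop();
    Optional<LimitSwitchEngine> boundToLimitSwitches();
    Optional<PositionableEngine> freelyPositionable();
}

// Hypothetical engine that composes its positioning part instead of inheriting it.
final class GantryEngine implements Engine {
    private final PositionableEngine positioning = new PositionableEngine();

    @Override public boolean isMoving() { return false; } // simplified for the sketch

    @Override public void emergencyStop() { }

    @Override public Optional<LimitSwitchEngine> boundToLimitSwitches() {
        return Optional.empty();
    }

    @Override public Optional<PositionableEngine> freelyPositionable() {
        return Optional.of(positioning); // a different instance than this engine
    }
}

class CompositionDemo {
    public static void main(String[] args) {
        Engine engine = new GantryEngine();
        engine.freelyPositionable().ifPresent(p -> p.moveTo(3.5));
        System.out.println(engine.freelyPositionable().get().target()); // 3.5
    }
}
```

A downcast could never produce this result, because the object returned by freelyPositionable() is not the engine object itself.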

Another advantage is discoverability. Your typical type hierarchy lookup in the IDE is replaced with code completion lookup. If you get the names right, this pattern feels like writing code on rails, because your code completion proposals will lead you to the correct place.

Your opinion

What is your opinion on this pattern? What would you expect from a code design that provides those “casting” methods? Tell us in the comments!