It has become somewhat of an internal meme that I do not like it when programmers use the word “wrapper”. When someone does say it, I usually get a cue from one of the others to start complaining about it. Do not get me wrong, though. I am very much in favor of wrapping things, but with purpose. And my favorite one is the façade.

When simple becomes complex

Many times, APIs start out simple and elegant. This usually works for a while and the API gets used a lot precisely because of its beauty and simplicity. But eventually, a new use case comes along that demands more of the API than it can currently serve. It has to be extended. This usually takes the form of an additional method or function parameter, or an additional function that needs to be called. Using the API now becomes more complex for all its users.

Do not underestimate this effect. I have only anecdotal evidence, but in my experience, a lot of unnecessary software complexity can be attributed to this¹. The Pareto principle applies here: a single new use case forces all users of the previously simple API to deal with the added complexity (e.g. 10% of the use cases cause 90% of the complexity at the call sites).

Façades make it look beautiful

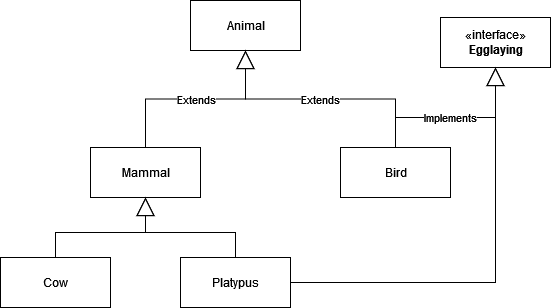

Luckily, it can be dealt with beautifully: using the façade pattern. This pattern abstracts a complex API behind a simple API. The trade-off, of course, is that the simple API is less powerful than the “full API”. In our example though, all of the previous use cases can keep using the simple API via a façade.

When to apply this

The aforementioned example, extending an API, is a very nice opportunity to apply the façade. Just keep the interface of the old API around, and re-implement it using the new, extended API, which is usually created by modifying the old API’s implementation. Now all the old call-sites can stay the same, yet you can have a more powerful API for those rare cases that need it.
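To make this concrete, here is a minimal sketch in Python. The names `format_price` and `format_money` are made up for illustration. Assume `format_price(amount)` was the original, simple API, and a new use case needed other currencies and separators:

```python
def format_money(amount: float, currency: str, decimals: int, thousands_sep: str) -> str:
    """Extended API (hypothetical): powerful, but every call site must decide everything."""
    text = f"{amount:,.{decimals}f}"  # Python's format spec groups thousands with ","
    if thousands_sep != ",":
        text = text.replace(",", thousands_sep)
    return f"{text} {currency}"


def format_price(amount: float) -> str:
    """The old interface, kept around as a façade over the extended API."""
    return format_money(amount, currency="EUR", decimals=2, thousands_sep=",")


# Old call sites stay exactly as they were.
print(format_price(1234.5))

# The rare case that needs more uses the extended API directly.
print(format_money(1500000, "JPY", 0, " "))
```

The defaults baked into `format_price` are simply whatever the old implementation did, so existing callers should not notice the change.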

Of course, you can also identify common usage patterns and refactor them using a façade, but that’s usually much harder to do.

What exactly are façades made of?

Façades do not hide the more complex API in the sense that the API's users are not allowed to use it. Yes, façades make APIs look beautiful, but that is where the metaphor ends. You can still access what is behind the façade. You can even write more façades for what lies behind, because many APIs have multiple common cases and only very few complex ones.
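As a rough illustration, Python's standard `json.dumps` takes quite a few keyword arguments. Two small façades can cover two common cases, while the full function stays available to anyone who needs it. The names `to_compact_json` and `to_pretty_json` are my own invention for this sketch:

```python
import json


def to_compact_json(obj) -> str:
    """Façade for the 'send it over the wire' case: no extra whitespace."""
    return json.dumps(obj, separators=(",", ":"), sort_keys=True)


def to_pretty_json(obj) -> str:
    """Façade for the 'a human will read this' case: indented, stable key order."""
    return json.dumps(obj, indent=2, sort_keys=True)


data = {"b": 1, "a": [1, 2]}
print(to_compact_json(data))
print(to_pretty_json(data))

# Nothing is hidden: the rare complex case still calls json.dumps directly.
print(json.dumps(data, ensure_ascii=False, default=str))
```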

So… Classes? Functions? Data? Any of those, in fact. Whenever you enable writing something in a simpler way for a common case, you have a façade. Very often, a small function with a simple signature is all the façade you need.
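A façade made of data can be as small as a named preset. In this sketch, `describe_connection` stands in for some hypothetical full API, and all names and values are assumptions chosen for illustration:

```python
from functools import partial


def describe_connection(host: str, port: int, timeout: float, use_tls: bool) -> str:
    """Hypothetical full API: every caller has to decide everything."""
    scheme = "tls" if use_tls else "plain"
    return f"{scheme}://{host}:{port} (timeout {timeout}s)"


# Façade as data: a named preset for the common case.
LOCAL_DEV = {"host": "localhost", "port": 5432, "timeout": 5.0, "use_tls": False}

# Façade as a function, built from that same preset.
describe_local = partial(describe_connection, **LOCAL_DEV)

print(describe_connection(**LOCAL_DEV))
print(describe_local())
```

Both forms give the common case a short spelling and leave the full signature untouched.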

But it makes all the difference.

Now can someone please tell me what that little hook under the c is called?

¹ Façades can, of course, also contribute to creating complexity by growing the codebase and creating ‘variants’. But they rarely do.