Given a floating-point number x, it is quite easy to square it: x = x * x;, or x *= x;. Similarly, to find its cube, you can use x = x * x * x;.

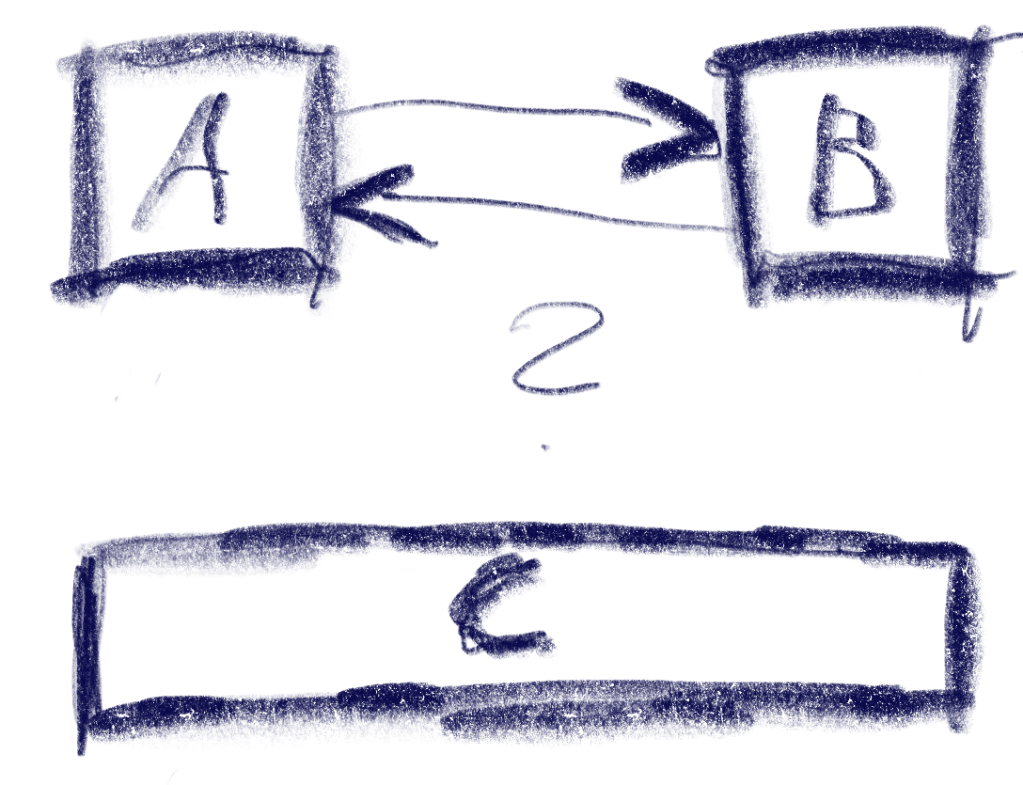

However, when raising it to the 4th power, things get more interesting. There’s the naive way: x = x * x * x * x;. And there’s the slightly obscure way, x *= x; x *= x;, which saves a multiplication.

When raising to the 8th power, the naive way really loses its appeal: x = x * x * x * x * x * x * x * x; versus x *= x; x *= x; x *= x;. That’s 7 multiplications versus just 3. This process can easily be extended to raise a number to any power-of-two N, and it only uses O(log(N)) multiplications.

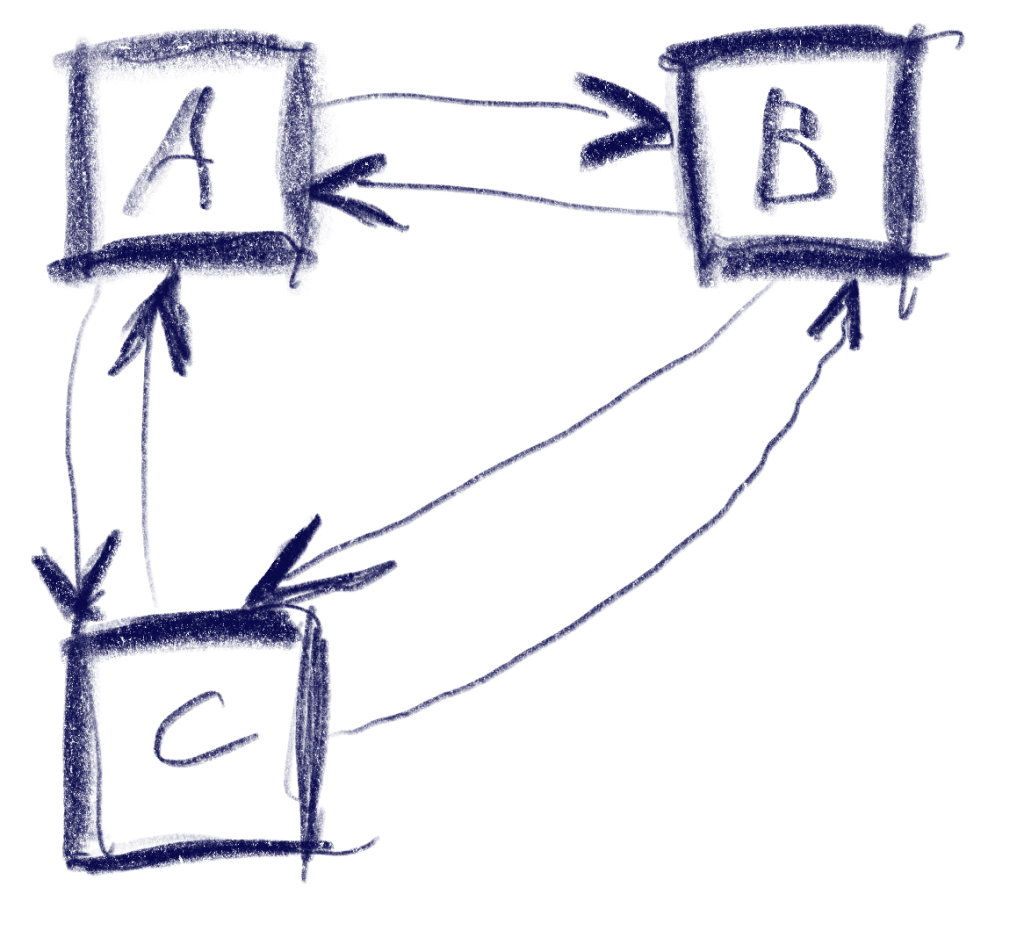
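The power-of-two case can be sketched as a small loop. The helper name pow_two_to_the_k and its parameter k are made up for this illustration; it raises x to the power 2^k using exactly k multiplications.

```cpp
// Hypothetical helper for illustration: computes x^(2^k) with k squarings.
float pow_two_to_the_k(float x, unsigned int k)
{
    for (unsigned int i = 0; i < k; ++i)
        x *= x; // after i + 1 iterations, x holds the original x^(2^(i + 1))
    return x;
}
```

For example, pow_two_to_the_k(x, 3) performs the same three squarings as x *= x; x *= x; x *= x; and yields the 8th power.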

The algorithm can also easily be extended to work with any integer power. This works by decomposing the exponent into a sum of powers of two, which turns the result into a product of x raised to those powers. Luckily, that’s exactly what the binary representation, so readily available on any computer, gives us. For example, let us try x to the power of 20. That’s 16+4, i.e. 10100 in binary, so x^20 = x^4 * x^16.

    x *= x; // x is the original x^2 after this
    x *= x; // x is the original x^4 after this
    result = x;
    x *= x; // x is the original x^8 after this
    x *= x; // x is the original x^16 after this
    result *= x; // result is the original x^4 * x^16 = x^20

Now let us throw this into some C++ code, with the power being a compile-time constant passed as a template parameter. That way, the optimizer can take out all the loops and generate just the straight-line sequence of multiplications, since the power is known at compile time.

    template <unsigned int y> float nth_power(float x)
    {
        auto p = y;
        // Bit 0 of the exponent decides whether the original x is part of the product.
        auto result = ((p & 1) != 0) ? x : 1.f;
        while (p > 0)
        {
            x *= x;     // x is now the original x^2, x^4, x^8, ...
            p = p >> 1; // move the next exponent bit into position 0
            if ((p & 1) != 0)
                result *= x;
        }
        return result;
    }

Interestingly, the big compilers do very different jobs optimizing this. GCC optimizes out the loop with -O2 only up to nth_power<15>, but with -O3 it keeps doing so for higher powers. clang reliably takes out the loop even with just -O2. MSVC doesn’t seem to eliminate the loop at all, nor does it remove the multiplication with 1.f if the lowest bit is not set. Let me know if you find an implementation that MSVC can optimize! All tested on Compiler Explorer (godbolt.org).
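One direction that might be worth trying is to move the loop out of the optimizer's hands entirely. The sketch below is an assumption on my part, not something from the tests above: the name nth_power_recursive is made up, it needs C++17 for if constexpr, and it has not been checked against MSVC. The recursion is resolved during template instantiation, so no runtime loop is left to unroll, although whether the nested calls get inlined is still up to the compiler.

```cpp
// Hypothetical variant for illustration (requires C++17).
// Each instantiation consumes the lowest bit of y and recurses on y / 2 with x squared.
template <unsigned int y> constexpr float nth_power_recursive(float x)
{
    if constexpr (y == 0)
        return 1.f;                                   // x^0
    else if constexpr (y == 1)
        return x;                                     // last set bit, no further squaring
    else if constexpr ((y & 1) != 0)
        return x * nth_power_recursive<y / 2>(x * x); // bit set: this power is part of the product
    else
        return nth_power_recursive<y / 2>(x * x);     // bit clear: only square
}
```

For nth_power_recursive<20> this expands to four squarings and one extra multiplication, matching the hand-written sequence for x to the power of 20, and it never multiplies by 1.f for a nonzero power.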