I hope we all agree that emitting null values is a hostile move. If you are not convinced, please ask the inventor of the null pointer, Sir Tony Hoare. Or just listen to him giving you an elaborate answer to your question:

https://www.infoq.com/presentations/Null-References-The-Billion-Dollar-Mistake-Tony-Hoare/

So, every time you pass a null value across your code’s boundary, you essentially outsource a problem to somebody else. And even worse, you multiply the problem, because every client of yours needs to deal with it.

But what about the entries to your functionality? The parameters of your methods? If somebody passes null into your code, it’s clearly their fault, right?

Let’s look at an example from PDFBox, a Java library that deals with the PDF file format. If you want to merge two or more PDF documents, you might write code like this:

    import java.io.File;
    import org.apache.pdfbox.multipdf.PDFMergerUtility;

    File left = new File("C:/temp/document1.pdf");
    File right = new File("C:/temp/document2.pdf");
    PDFMergerUtility merger = new PDFMergerUtility();
    merger.setDestinationFileName("C:/temp/combined.pdf");
    merger.addSource(left);
    merger.addSource(right);
    merger.mergeDocuments(null); // null selects the default stream cache

If you copy this code verbatim, please be aware that proper exception and resource handling is missing here. But that’s not the point of this blog entry. Instead, I want you to look at the last line, especially the parameter. It is a null pointer and it was my decision to pass it here. Or was it really?

If you look at the Javadoc of the method, you’ll notice that it expects a StreamCacheCreateFunction type, or “a function to create an instance of a stream cache”. If you don’t want to be specific, they tell you that “in case of null unrestricted main memory is used”.

Well, in our example code above, we have no need to be specific about a stream cache. We could implement our own UnrestrictedMainMemoryStreamCacheCreator, but it would just add cognitive load for the next reader without providing any benefit. So, we decide to use the convenience value of null and don’t overthink the situation.

But that’s the same as emitting null from your code over a boundary, just in the other direction. We use null as a way to communicate a standard behaviour here. And that’s deeply flawed, because null is not standard and it is not convenient.

Offering an interface that encourages clients to use null for convenience or abbreviation purposes should be considered just as hostile as returning null in case of errors or “non-results”.

How could this situation be defused by the API author? Two simple solutions come to mind:

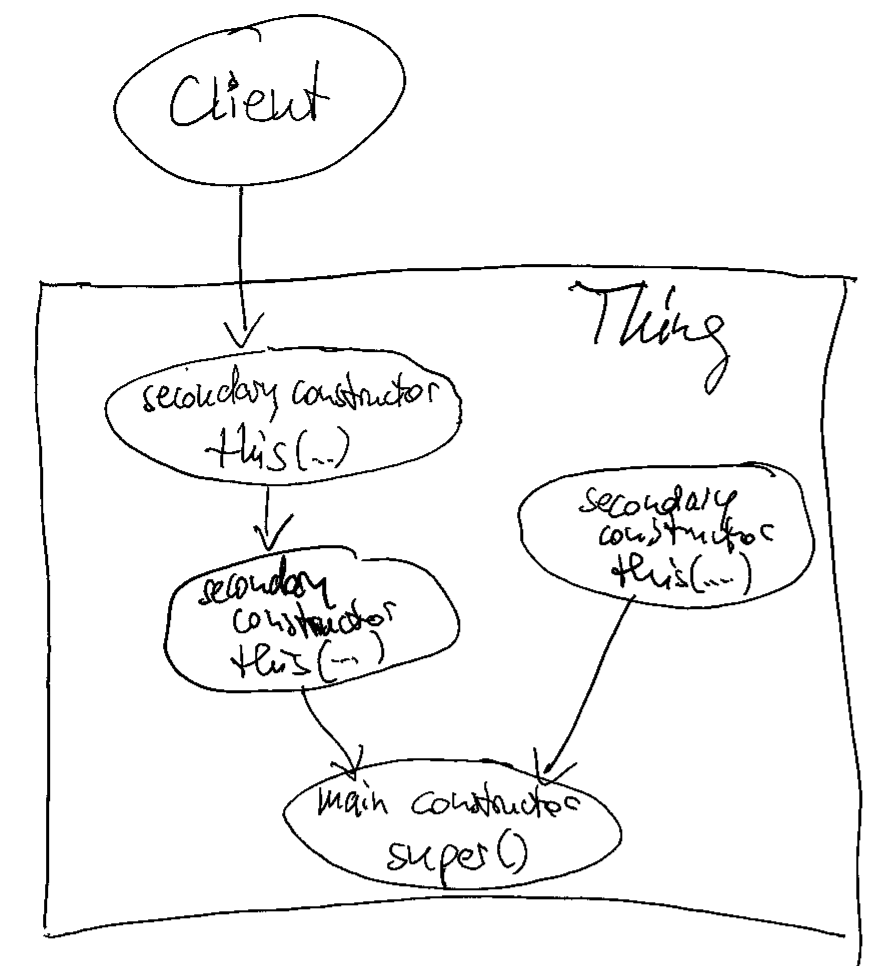

- There could be a parameter-less method that internally delegates to the parameterized one, using the convenient null value. This way, my client code stays clear of null values and states its intent without magic values, whereas the implementation is free to work with null internally. Working with null is not that big of a problem, as long as it doesn’t pass a boundary. The internal workings of a code entity are nobody’s concern as long as they aren’t visible from the outside.

- Or we could define the parameter as optional. I mean in the sense of Optional<StreamCacheCreateFunction>. It replaces null with Optional.empty(), which is still a bit weird (why would I pass an empty box to a code entity?), but communicates the situation better than before.
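Both ideas can be sketched in a few lines. This is only an illustration, not PDFBox code: `CacheFactory`, `SketchMerger` and `mergeDocumentsUsing` are hypothetical names I made up, and the actual merging is reduced to returning a description string.

```java
import java.util.Objects;
import java.util.Optional;

// Hypothetical stand-in for the library's cache-creating function type.
interface CacheFactory {
    String describe();
}

// Hypothetical merger; the merge itself is reduced to a description string.
class SketchMerger {

    // Solution 1: a parameter-less overload. The caller states the intent
    // "use the default" without ever writing null.
    String mergeDocuments() {
        return merge(null); // null never leaves this class
    }

    // Callers who do care about the cache pass a real object.
    String mergeDocuments(CacheFactory cacheFactory) {
        return merge(Objects.requireNonNull(cacheFactory, "cacheFactory"));
    }

    // Solution 2: the signature itself says the argument may be absent.
    String mergeDocumentsUsing(Optional<CacheFactory> cacheFactory) {
        return merge(cacheFactory.orElse(null));
    }

    // Internal implementation, free to work with null behind the boundary.
    private String merge(CacheFactory cacheFactoryOrNull) {
        String cache = (cacheFactoryOrNull == null)
                ? "unrestricted main memory"
                : cacheFactoryOrNull.describe();
        return "merged using " + cache;
    }
}

class SketchMergerDemo {
    public static void main(String[] args) {
        SketchMerger merger = new SketchMerger();
        System.out.println(merger.mergeDocuments());
        System.out.println(merger.mergeDocumentsUsing(Optional.empty()));
        System.out.println(merger.mergeDocuments(() -> "a temp file"));
    }
}
```

In this sketch, the first two calls end up in the same default branch, but neither of them requires the client to type the word null. The one-argument overload rejects null outright, so the convenience shortcut is no longer on offer.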

Of course, the library could also offer a variety of useful standard implementations for that interface, but that would essentially be the same solution as the self-written implementation, minus the coding effort.
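For completeness, here is a rough sketch of what such named standard implementations might look like. Again, everything here is assumed for illustration: `CacheFactory`, `StreamCaches`, its factory methods and `NamedDefaultMerger` are hypothetical and do not describe what PDFBox actually ships.

```java
import java.util.Objects;

// Hypothetical stand-in for the library's cache-creating function type.
interface CacheFactory {
    String describe();
}

// Hypothetical collection of ready-made implementations with telling names.
final class StreamCaches {
    private StreamCaches() {
        // static factory methods only
    }

    // The behaviour that null used to select, now with a name.
    static CacheFactory unrestrictedMainMemory() {
        return () -> "unrestricted main memory";
    }

    // A second standard choice, to show that the pattern scales.
    static CacheFactory mainMemoryLimitedTo(long maxBytes) {
        if (maxBytes <= 0) {
            throw new IllegalArgumentException("maxBytes must be positive");
        }
        return () -> "main memory limited to " + maxBytes + " bytes";
    }
}

// Hypothetical merger that no longer accepts null at its boundary.
class NamedDefaultMerger {
    String mergeDocuments(CacheFactory cacheFactory) {
        Objects.requireNonNull(cacheFactory, "cacheFactory");
        return "merged using " + cacheFactory.describe();
    }
}

class NamedDefaultDemo {
    public static void main(String[] args) {
        NamedDefaultMerger merger = new NamedDefaultMerger();
        System.out.println(merger.mergeDocuments(StreamCaches.unrestrictedMainMemory()));
        System.out.println(merger.mergeDocuments(StreamCaches.mainMemoryLimitedTo(1024)));
    }
}
```

The call site then reads `merger.mergeDocuments(StreamCaches.unrestrictedMainMemory())`, which tells the next reader exactly what a bare null only hinted at in the Javadoc.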

In summary, every occurrence of a null pointer should be treated as toxic. If you handle toxic material inside your code entity without spilling it, that’s on you. If somebody spills toxic material as a result of a method call, that’s a hostile act.

But inviting your clients to use toxic material for convenience should be considered a hostile attitude, too. It normalizes harmful behaviour and leads to careless usage of the most dangerous pointer value in existence.